While picking up some books for my dissertation from the science and engineering library, I stumbled across a history book that sounded interesting: When Physics Became King. I've enjoyed it a lot so far and hope to remember it, so writing about it seems useful. I also think it brings up some interesting ideas that relate to modern debates, so blogging about the book seems even more useful.

Some recaps and thoughts, roughly in the thematic order the book presents in the first three chapters:

- It’s worth pointing out how deeply tied to politics natural philosophy/physics was as it developed into a scientific discipline in the 17th-19th centuries. We tend to think of “science policy” and the interplay between science and politics as a 20th century innovation, but the establishment of government-run or sponsored scientific societies was a big deal in early modern Europe. During the French Revolution, the Committee of Public Safety suppressed the old Royal Academy, and the later establishment of the Institut National was regarded as an important development for the new republic. Similarly, people’s conception of science was considered intrinsically linked to their political and often metaphysical views. (This always amuses me when people say science communicators like Neil deGrasse Tyson or Bill Nye should shut up, since the idea of science as something that should influence our worldviews is basically as old as modern science.)

- Similarly, science was considered intrinsically linked to commerce, and the desire was for new devices to better reflect the economy of nature by more efficiently converting energy between various forms. I am also greatly inspired by the work of Dr. Chanda Prescod-Weinstein, a theoretical physicist and historian of science and technology, on this. One area that Morus, the book's author, doesn’t really get into is that the major impetus for astronomy during this time was improving celestial navigation, so ships could more efficiently move goods and enslaved persons between Europe and its colonies (Prescod-Weinstein discusses this in her introduction to her Decolonizing Science Reading List, which she perennially updates with new sources and links to other similar projects). This practical use of astronomy is lost to most of us in modern society, and we now focus on spinoff technology when we want to sell space science to the public, but it was very important to establishing astronomy as a science as astrology lost its luster. Dr. Prescod-Weinstein also brings up an interesting theoretical point I hadn’t considered in her evaluation of the climate of cosmology, and even specifically references When Physics Became King. She notes that the driving force in institutional support of physics was new methods of generating energy, and thus the establishment of energy as a foundational concept in physics (as opposed to Newton’s focus on force) may have been influenced by early physics’ interactions with early capitalism.

- The idea of universities as places where new knowledge is created was basically unheard of until late in the 1800s, and they were very reluctant to teach new ideas. In 1811, it was a group of students (including Charles Babbage and John Herschel) who essentially led Cambridge to move from Newtonian formulations of calculus to the French analytic formulation (which gives us the dy/dx notation), and this was considered revolutionary in both an intellectual and political sense. When Carl Friedrich Gauss provided his thoughts on finding a new professor at the University of Göttingen, he actually suggested that highly regarded researchers and specialists might be inappropriate because he doubted their ability to teach broad audiences.

- The importance of math in university education is interesting to compare to modern views. It wasn’t really assumed that future imperial administrators would use calculus, but that those who could learn it were probably the most fit to do the other intellectual tasks needed.

- In the early 19th century, natural philosophy was the lowest-regarded discipline in the philosophy faculties in Germany. It was actually Gauss who helped raise the discipline's standing by promoting research as part of the professor's role. The increasing importance of research also led to a disciplinary split between theoretical and experimental physics, and in the German states, being able to hire theoretical physicists at universities became a mark of distinction.

- Some physicists were allied to Romanticism because the conversion of energy between various mechanical, chemical, thermal, and electrical forms was viewed as showing the unity of nature. Also, empiricism, particularly humans directly observing nature through experiments, was viewed as a means of investigating the mind and broadening experience.

- The emergence of energy as the foundational concept of physics was controversial. One major complaint was that people have a less intuitive conception of energy than of forces, which we think about all the time. Others objected that energy isn’t actually a physical property, but a useful calculational tool (and the question of what exactly energy is still pops up in modern philosophy of science, especially in how to best explain it). The development of theories of the luminiferous (a)ether is linked a bit to this, as an explanation of where electromagnetic energy is: ether theories suggested the ether stored the energy associated with waves and fields.

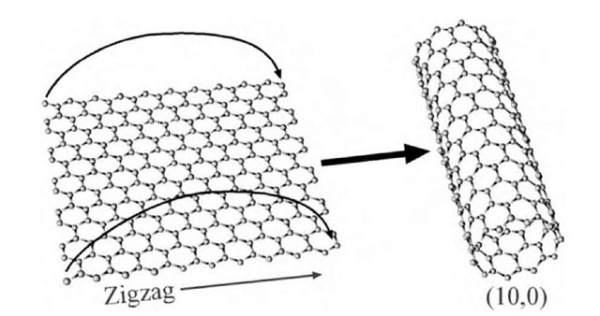

Some of the different ways a nanotube can be rolled up. The numbers in parentheses are the “chiral vector” of the nanotube and determine its diameter and electronic properties.
